A collaborative pilot effort to host an accessible, highly efficient computing resource on campus

The high-throughput computing program is no longer a pilot. Resource information and support can be found on the Research IT Portal HTC page.

Thanks to a collaborative effort among Engineering IT Shared Services, the National Center for Supercomputing Applications (NCSA), Technology Services, and campus researchers, Illinois reached a major milestone in spring 2018 by successfully providing high-throughput computing (HTC) services. By combining large computing resources with repurposed hardware, specialized software, and researchers from several departments, this HTC pilot effort shows that an accessible, highly efficient computing resource hosted on campus is within reach.

HTC and the power of many

High-throughput computing is an environment of pooled computing resources that delivers very large amounts of processing capacity over an extended period of time. The “pool” is made up of individual owners (e.g., a researcher) or group owners (e.g., a computer lab) who contribute their smaller computing resources in exchange for the opportunity to use the collective, large-capacity processing power of HTC for their research. Essentially, HTC breaks up large computational jobs into smaller, easier-to-manage tasks that can run independently across the pool, so results arrive much sooner than they would from a single machine. Specialized software called HTCondor manages and optimizes the task scheduling for HTC environments.
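For readers who want to see the idea in miniature, here is a rough Python sketch of the split, process, and reassemble pattern described above. It is an illustration only, not how HTCondor or the campus service is implemented: the function names are hypothetical, the sum of squares is a stand-in for real research work, and local worker threads stand in for the machines in a pool.

```python
from concurrent.futures import ThreadPoolExecutor


def split_into_tasks(data, task_size):
    """Break one large job into independent, fixed-size chunks."""
    return [data[i:i + task_size] for i in range(0, len(data), task_size)]


def run_task(chunk):
    """Hypothetical per-task computation; each chunk needs no other chunk."""
    return sum(x * x for x in chunk)


def run_job(data, task_size=1000, workers=4):
    """Split the job, run the tasks on a pool of workers, combine results."""
    tasks = split_into_tasks(data, task_size)
    # Assumption: worker threads stand in for pooled machines. A real HTC
    # scheduler would hand each task to whichever machine is free.
    with ThreadPoolExecutor(max_workers=workers) as pool:
        partial_results = list(pool.map(run_task, tasks))
    # Reassemble: order does not matter here because the tasks are independent.
    return sum(partial_results)


if __name__ == "__main__":
    print(run_job(list(range(10_000))))
```

Because no task depends on another, adding more workers (or, in a real pool, more contributed machines) lets more tasks run at the same time without changing the final answer.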

Deputy CIO for Research IT, John Towns, has had high-throughput computing on his agenda for a while. But when it came to finding and managing the needed resources for an HTC service, the funding for a support system wasn’t in place. It would take a little creativity and a lot of collaboration to drive the project forward.

Building the HTC system

Ultimately, the plan for building this service was to pool together resources that already existed on campus and then build upon that foundation with the necessary hardware and software requirements. Ideally, said Towns, we will be able to “offer [HTC] as a free service to researchers in units that, in turn, add workstation, lab, and similar resources to the pool of resources supporting the service.”

Tim Boerner, Assistant Director of ICCP Operations, explained that it is not as easy as it sounds. “It takes a lot of coordination and work to get volunteers who can commit their time to make this happen.” Hardware can also be hard to come by when resources are low.

Fortunately, in June 2017, the Campus Cluster* was set to retire 440 compute servers (processing hardware). “So we removed failing components and reintegrated the hardware into useable HTC resources,” said Boerner, “and used it to jump start the HTC pilot in the summer of 2017.”

By the start of the 2017 Fall semester, the team had compiled most of the necessary components for an HTC service, but without funding and human resources, getting the pilot up and running was proving to be a challenge.

A true collaborative resource

While HTC is an important and complicated story about a technological advancement, it is also about a major collaborative human effort that is paying off in a huge way for our campus and the future of campus research. In fact, the biggest challenge was organizing the right people to execute the needed functions.

After some negotiations, the management in Engineering IT, NCSA, and Technology Services agreed to dedicate a percentage of staff time to the HTC effort. “Old hardware and servers were salvaged and put together by volunteers who have other jobs,” said Boerner of the process, “and it is now doing important science on a global scale.”

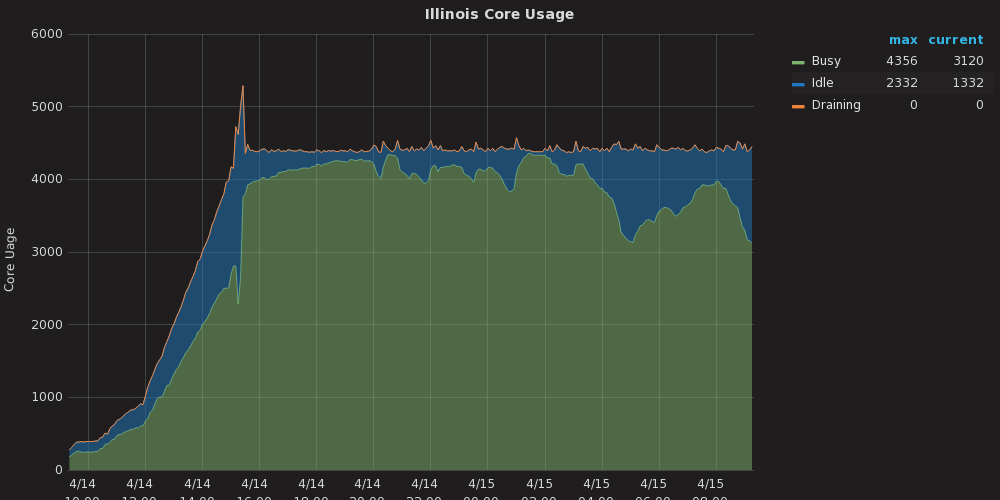

The combined efforts of these volunteers to acquire and repurpose the essential hardware and to configure the HTCondor software finally led to the integration of HTC compute nodes (processing networks) into a science workflow. During one weekend in April 2018, the U.S. ATLAS Midwest Tier-2** research team, one of the HTC Pilot Effort’s “friendly users,” was able to incorporate the HTC pilot resource into their science workflows to run some very large (4,000 cores) and fast computing jobs that will advance current research being done on campus. (See Figure A.)

What’s next?

Looking ahead, the challenges include how to provide both funding and staffing. As more researchers use HTC, the need to grow and support this resource will only increase.

The team continues to develop a plan for increasing the size of the computing resource pool. “Meanwhile,” said Towns, “we are looking toward the initial release of the [HTC] service over the summer, and working with various units that are interested in joining this service through the remainder of 2018.” In other words, the system will continue to gain computing resources, which will increase its workload capacity and, the team hopes, help more researchers get the results they need.

For more information about participating in the high-throughput computing pilot, or the overall effort, email research-it@illinois.edu.

*The Campus Cluster, a High Performance Computing (HPC) resource housed in the Advanced Computation Building, had been used by many researchers to run very large processing jobs. What is the difference between HTC and HPC? An HPC system is designed to enable many compute servers to work together on a single job. It takes related data and processes it in a devised order. Although this is a fantastic resource (it’s like a pieced-together supercomputer!), it does not use all that processing power in the most efficient way when the work does not need to be coordinated. HTC can break up the data, process portions of it independently, and reassemble the results. It is a highly efficient way to compute large amounts of data that don’t need to be processed in a particular order.

**The U.S. ATLAS Midwest Tier-2 (MWT2) is a consortium of the University of Chicago, University of Illinois at Urbana-Champaign, and Indiana University. This team contributes resources to advance experimental particle physics at the Large Hadron Collider (LHC), based at the CERN Laboratory in Geneva, Switzerland.